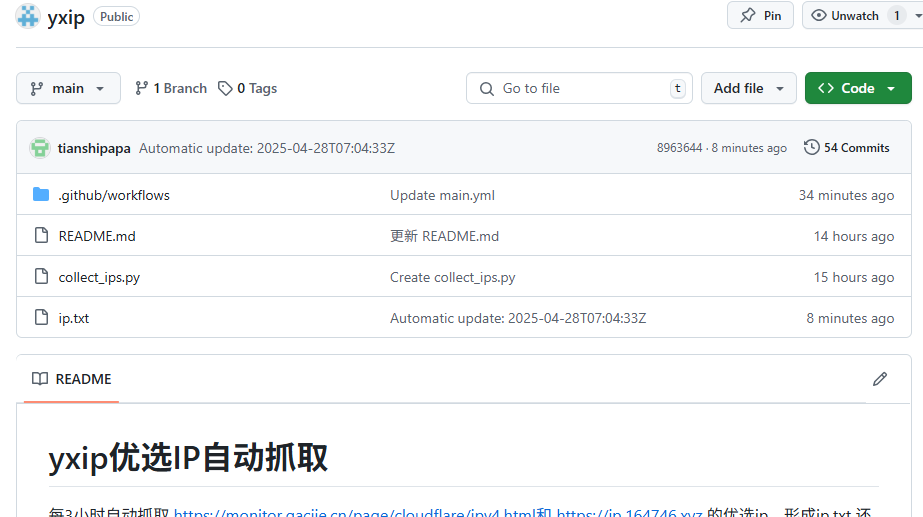

GitHub project: automatically fetch preferred IPs

1. Create a new repository on GitHub, for example `yxip`.

2. In the `yxip` repository, create a file named `collect_ips.py` with the following content:

```python
import requests
```
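The rest of `collect_ips.py` is not shown here. Judging from the workflow's dependencies (`requests`, `beautifulsoup4`) and the two sources named at the end of this article, the script downloads those pages, extracts IPv4 addresses and writes them to `ip.txt`. The following is a minimal sketch under those assumptions, not the author's script: it swaps in the standard library (`urllib.request` plus a regular expression) for `requests`/BeautifulSoup, and the helper names `extract_ips`, `fetch` and `main` are hypothetical.

```python
import re
import urllib.request

# The two sources named in this article. Assumption: both pages show the
# IPs as plain dotted-quad text somewhere in the response body.
URLS = [
    "https://monitor.gacjie.cn/page/cloudflare/ipv4.html",
    "https://ip.164746.xyz",
]

# Dotted-quad candidates; each octet is range-checked in extract_ips().
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_ips(text):
    """Return unique, valid IPv4 addresses found in text, in first-seen order."""
    valid = (
        candidate
        for candidate in IPV4_RE.findall(text)
        if all(int(octet) <= 255 for octet in candidate.split("."))
    )
    return list(dict.fromkeys(valid))  # dict keeps insertion order, drops repeats


def fetch(url, timeout=10):
    """Download a page and decode it as UTF-8, replacing undecodable bytes."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def main(path="ip.txt"):
    found = []
    for url in URLS:
        try:
            found.extend(extract_ips(fetch(url)))
        except OSError as exc:  # URLError/HTTPError/timeouts: skip this source
            print(f"skipping {url}: {exc}")
    ips = list(dict.fromkeys(found))  # de-duplicate across sources
    if not ips:
        # Do not overwrite ip.txt with an empty list if every source failed.
        print(f"no IPs found; leaving {path} unchanged")
        return
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(ips) + "\n")
    print(f"wrote {len(ips)} IPs to {path}")


if __name__ == "__main__":
    main()
```

Matching a regular expression over the raw page text avoids depending on each site's HTML layout, at the cost of also picking up any other IPv4-looking strings on the page. Leaving `ip.txt` untouched when nothing is found keeps a temporary outage of both sources from producing an empty commit.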

3. In the `yxip` repository, create the file `.github/workflows/main.yml` with the following content:

```yaml
name: Update IP List

on:
  schedule:
    - cron: '*/45 * * * *'  # runs at minute 0 and minute 45 of every hour
  workflow_dispatch:
  push:

jobs:
  update-ip-list:
    runs-on: ubuntu-latest
    permissions:
      contents: write  # write permission is required to push ip.txt
    steps:
      # Key change 1: check out with a token
      - uses: actions/checkout@v3
        with:
          token: ${{ secrets.GITHUB_TOKEN }}  # inject credentials
          fetch-depth: 0  # fetch the full commit history

      # Key change 2: sync the latest code first
      - name: Pre-sync repository
        run: |
          git config --global user.email "tianshideyou@proton.me"  # replace with your own
          git config --global user.name "tianshipapa"              # replace with your own
          # github.repository expands to owner/repo, so this works in any copy of the project
          git remote set-url origin https://x-access-token:${{ secrets.GITHUB_TOKEN }}@github.com/${{ github.repository }}.git
          git pull origin main  # plain pull of the latest code

      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.9'

      - name: Install dependencies
        run: |
          pip install requests beautifulsoup4

      - name: Run script
        run: python ${{ github.workspace }}/collect_ips.py

      # Key change 3: commit only when ip.txt actually changed
      - name: Commit and push changes
        run: |
          # Detect changes to ip.txt (including a newly created file)
          if [ -n "$(git status --porcelain -- ip.txt)" ]; then
            git add ip.txt
            git commit -m "Automatic update: $(date -u +'%Y-%m-%dT%H:%M:%SZ')"
            # Retry the rebase-pull up to 3 times, then push
            for i in {1..3}; do
              git pull --rebase origin main && break || sleep 5
            done
            git push origin HEAD:main
            echo "✅ Update pushed successfully"
          else
            echo "🔄 No IP changes detected"
          fi
```
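In cron syntax, `*/45` in the minute field matches the minutes within each hour that are multiples of 45, i.e. minutes 0 and 45, so consecutive runs are 45 and then 15 minutes apart rather than strictly every 45 minutes. The sketch below expands a minute-field `*/N` expression to show this; `expand_step` and `gaps_per_hour` are hypothetical helpers written for illustration and handle only the `*/N` form, not full cron syntax.

```python
def expand_step(field, lo=0, hi=59):
    """Expand a cron '*/N' expression into the matching values in [lo, hi]."""
    if not field.startswith("*/"):
        raise ValueError("only '*/N' step expressions are handled here")
    step = int(field[2:])
    return list(range(lo, hi + 1, step))


def gaps_per_hour(minutes):
    """Minutes between consecutive runs, wrapping around into the next hour."""
    following = minutes[1:] + [minutes[0] + 60]
    return [b - a for a, b in zip(minutes, following)]


minutes = expand_step("*/45")
print(minutes)                 # [0, 45]
print(gaps_per_hour(minutes))  # [45, 15]
```

For a true fixed interval, the step should divide 60 evenly (for example `*/15` or `*/30`).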

That completes the setup: `collect_ips.py` fetches preferred IPs from https://monitor.gacjie.cn/page/cloudflare/ipv4.html and https://ip.164746.xyz, and the selected IPs are saved in the `ip.txt` file.
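Once `ip.txt` is committed, other tools can read it from the repository's raw file URL. A minimal sketch, assuming a public repository, the `main` branch and one entry per line; `OWNER` is a placeholder for your GitHub user name, and `parse_ip_list`/`load_ips` are hypothetical helpers.

```python
import urllib.request

# Placeholder URL: replace OWNER with your GitHub user name (assumes a public repo).
RAW_URL = "https://raw.githubusercontent.com/OWNER/yxip/main/ip.txt"


def parse_ip_list(text):
    """Split ip.txt content into stripped, non-empty lines (one entry per line assumed)."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def load_ips(url=RAW_URL, timeout=10):
    """Download ip.txt from the repository and return its entries as a list."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_ip_list(resp.read().decode("utf-8"))
```

After replacing `OWNER`, calling `load_ips()` returns the current list as Python strings.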

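If running at minute 0 and minute 45 of every hour is too frequent, change the `on:` section as follows to run twice a day:

```yaml
on:
  schedule:
    - cron: '3 1,13 * * *'  # every day at 01:03 and 13:03 UTC
  workflow_dispatch:        # keep the manual trigger
  push:
```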
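GitHub evaluates cron schedules in UTC. A quick sketch converting the two run times to China Standard Time, assuming a fixed UTC+8 offset (China does not observe daylight saving time); `utc_run_times_in_cst` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

CST = timezone(timedelta(hours=8), "CST")  # China Standard Time, fixed UTC+8


def utc_run_times_in_cst(hours, minute):
    """Convert daily UTC run times (list of hours, one minute value) to 'HH:MM' in UTC+8."""
    base = datetime(2024, 1, 1, tzinfo=timezone.utc)  # arbitrary reference day
    return [
        base.replace(hour=h, minute=minute).astimezone(CST).strftime("%H:%M")
        for h in hours
    ]


print(utc_run_times_in_cst([1, 13], 3))  # ['09:03', '21:03']
```

So `'3 1,13 * * *'` corresponds to 09:03 and 21:03 Beijing time.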